Episode 3: Trying to Build a Private Cloud ~Part 2~


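Manager Shida

In his younger days he made his name as a developer on many large-scale development projects. Drawing on that experience, he later worked as a firefighter for projects in crisis and on R&D into cutting-edge technology, earning the nickname "Shida, Master of Defense and Offense."

Habuka-kun

Newly assigned to the department Manager Shida leads, he flounders his way through the trendy world of "the cloud" under Manager Shida's direction.

Tommy Takemoto

A box-shaped robot from the world of the future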

ConoHa Advent Calendar 2015, Day 4

root@133-130-116-204:~# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/vda1 49G 1.6G 45G 4% /

none 4.0K 0 4.0K 0% /sys/fs/cgroup

udev 7.9G 12K 7.9G 1% /dev

tmpfs 1.6G 396K 1.6G 1% /run

none 5.0M 0 5.0M 0% /run/lock

none 7.9G 72K 7.9G 1% /run/shm

none 100M 0 100M 0% /run/user

root@133-130-116-204:~# cat /proc/partitions

major minor #blocks name

253 0 52428800 vda

253 1 51380224 vda1

253 2 1 vda2

253 5 1045504 vda5

253 16 209715200 vdb

11 0 1048575 sr0

11 1 434 sr1
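Reading the listing above: /proc/partitions reports sizes in 1 KiB blocks. That makes vda 52428800 KiB (50 GiB), carrying partitions vda1, vda2 and vda5, and vdb 209715200 KiB (200 GiB) with no partitions yet. The following is a minimal Python sketch of that reading. It assumes the virtio naming seen here (vdX for whole disks, vdXN for partitions), and `virtio_disks` / `partitions_of` are hypothetical helper names.

```python
import re

# /proc/partitions as shown above; the #blocks column counts 1 KiB blocks.
SAMPLE = """\
major minor  #blocks  name

 253        0   52428800 vda
 253        1   51380224 vda1
 253        2          1 vda2
 253        5    1045504 vda5
 253       16  209715200 vdb
  11        0    1048575 sr0
  11        1        434 sr1
"""


def rows(text):
    """Yield (blocks, name) for data rows; the header and blank lines are skipped."""
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 4 and fields[0].isdigit():
            yield int(fields[2]), fields[3]


def virtio_disks(text):
    """Return {disk: size in GiB} for whole virtio disks (vda, vdb, ...)."""
    return {name: blocks / (1024 * 1024)
            for blocks, name in rows(text)
            if re.fullmatch(r"vd[a-z]+", name)}


def partitions_of(text, disk):
    """Return the partition names (disk name plus digits) of one disk."""
    return [name for _, name in rows(text)
            if re.fullmatch(re.escape(disk) + r"\d+", name)]


print(virtio_disks(SAMPLE))          # {'vda': 50.0, 'vdb': 200.0}
print(partitions_of(SAMPLE, "vdb"))  # []
```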

# echo ',' | sfdisk /dev/vdb

# mkfs.ext4 /dev/vdb1

# mount /dev/vdb1 /mnt

# rsync -PHSav --exclude '/mnt/' --exclude '/proc/' --exclude '/sys/' / /mnt/

# mkdir -p /mnt/{proc,sys}

root@133-130-116-204:~# diff -u /etc/fstab /mnt/etc/fstab

--- /etc/fstab 2015-05-05 12:44:51.200384000 +0900

+++ /mnt/etc/fstab 2015-11-21 00:05:38.196995183 +0900

@@ -6,6 +6,6 @@

 #

 # <file system> <mount point> <type> <options> <dump> <pass>

 # / was on /dev/vda1 during installation

-UUID=24e7865b-dfab-4391-a110-514008616841 / ext4 errors=remount-ro 0 1

+/dev/vdb1 / ext4 errors=remount-ro 0 1

 # swap was on /dev/vda5 during installation

 UUID=c2c8ba86-f869-4608-859a-b29e1e28b162 none swap sw 0 0
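The only change in the copied fstab is the device field of the / entry. The old UUID identifies the filesystem on vda1, so the copy that lives on vdb1 has to name /dev/vdb1 instead. The following is a minimal sketch of the same edit in Python, assuming whitespace-separated fstab fields. `set_root_device` is a hypothetical helper, and it collapses the rewritten entry's column spacing to single spaces.

```python
# Entries taken from the fstab shown in the diff above.
FSTAB = """\
# / was on /dev/vda1 during installation
UUID=24e7865b-dfab-4391-a110-514008616841 / ext4 errors=remount-ro 0 1
# swap was on /dev/vda5 during installation
UUID=c2c8ba86-f869-4608-859a-b29e1e28b162 none swap sw 0 0
"""


def set_root_device(fstab_text, new_device):
    """Replace the device (1st field) of the entry mounted on "/"."""
    out = []
    for line in fstab_text.splitlines():
        fields = line.split()
        # Skip comments; the mount point is the 2nd field of an entry.
        if len(fields) >= 2 and not fields[0].startswith("#") and fields[1] == "/":
            fields[0] = new_device
            line = " ".join(fields)  # note: original column spacing is not kept
        out.append(line)
    return "\n".join(out) + "\n"


print(set_root_device(FSTAB, "/dev/vdb1"))
```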

# sed -i -e "s|root=UUID=[0-9a-f-]*|root=/dev/vdb1|g" /boot/grub/grub.cfg
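The sed expression rewrites every `root=UUID=...` kernel argument in grub.cfg to `root=/dev/vdb1`. It only matches the literal `root=UUID=` prefix, so GRUB's own `search --fs-uuid --set=root <uuid>` lines are left untouched. GRUB therefore still loads the kernel from vda1, while the kernel mounts vdb1 as its root filesystem. The following Python sketch reproduces the substitution with the same regular expression. The two sample grub.cfg lines are illustrative (the kernel path is a placeholder), not copied from the host.

```python
import re

# Illustrative grub.cfg fragment; "vmlinuz-VERSION" is a placeholder.
CFG = (
    "search --no-floppy --fs-uuid --set=root 24e7865b-dfab-4391-a110-514008616841\n"
    "linux /boot/vmlinuz-VERSION root=UUID=24e7865b-dfab-4391-a110-514008616841 ro\n"
)


def retarget_root(cfg_text, device):
    """Same pattern as the sed command: root=UUID= followed by hex digits and dashes."""
    return re.sub(r"root=UUID=[0-9a-f-]*", "root=" + device, cfg_text)


print(retarget_root(CFG, "/dev/vdb1"))
```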

# df -h

df: ‘/tmp/tmp8t5ABN’: No such file or directory

Filesystem Size Used Avail Use% Mounted on

/dev/vdb1 197G 1.6G 186G 1% /

none 4.0K 0 4.0K 0% /sys/fs/cgroup

udev 7.9G 12K 7.9G 1% /dev

tmpfs 1.6G 400K 1.6G 1% /run

none 5.0M 4.0K 5.0M 1% /run/lock

none 7.9G 0 7.9G 0% /run/shm

none 100M 0 100M 0% /run/user
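In this second df output, / is served by /dev/vdb1 at 197G instead of /dev/vda1 at 49G, so the root filesystem now lives on the new disk. As a small illustration, here is a hypothetical helper that pulls the root filesystem out of df text. It simply looks for the row whose last column is /; the sample is trimmed from the output above.

```python
# Trimmed from the second df -h output above, including the stray error line.
DF_AFTER = """\
df: ‘/tmp/tmp8t5ABN’: No such file or directory
Filesystem      Size  Used Avail Use% Mounted on
/dev/vdb1       197G  1.6G  186G   1% /
none            4.0K     0  4.0K   0% /sys/fs/cgroup
udev            7.9G   12K  7.9G   1% /dev
tmpfs           1.6G  400K  1.6G   1% /run
"""


def root_filesystem(df_text):
    """Return (device, size) for the row mounted on "/", or None."""
    for line in df_text.splitlines():
        fields = line.split()
        # Data rows have 6 columns and end with the mount point;
        # the header ends in "on" and the error line in "directory".
        if len(fields) >= 6 and fields[-1] == "/":
            return fields[0], fields[1]
    return None


print(root_filesystem(DF_AFTER))  # ('/dev/vdb1', '197G')
```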

+ blk_devices=vdb

+ for blk_dev in '${blk_devices}'

++ awk '/^\/dev\/vdb[0-9]* / {print $2}' /proc/mounts

+ mount_points=/

+ for mount_point in '${mount_points}'

+ umount /

umount: /: device is busy.

(In some cases useful info about processes that use

the device is found by lsof(8) or fuser(1))

blk_devices=$(lsblk -nrdo NAME,TYPE,RO | awk '/d[b-z]+ disk [^1]/ {print $1}')

for blk_dev in ${blk_devices}; do

# dismount any mount points on the device

mount_points=$(awk "/^\/dev\/${blk_dev}[0-9]* / {print \$2}" /proc/mounts)

for mount_point in ${mount_points}; do

umount ${mount_point}

sed -i ":${mount_point}:d" /etc/fstab

done
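This loop explains the trace above. blk_devices came out as vdb, and the awk filter selects every /proc/mounts line that starts with /dev/vdb plus optional partition digits. It therefore returned /, the new root filesystem, and umount refused it as busy. The following Python sketch mirrors that filter. The /proc/mounts excerpt is illustrative (mount options abbreviated), and `mount_points_on` is a hypothetical name.

```python
import re

# Illustrative /proc/mounts excerpt after the root moved to vdb1.
MOUNTS = """\
/dev/vdb1 / ext4 rw,relatime 0 0
proc /proc proc rw,nosuid,nodev,noexec 0 0
sysfs /sys sysfs rw,nosuid,nodev,noexec 0 0
"""


def mount_points_on(mounts_text, blk_dev):
    """Python version of: awk "/^\\/dev\\/${blk_dev}[0-9]* / {print \\$2}" /proc/mounts"""
    pattern = re.compile(r"^/dev/" + re.escape(blk_dev) + r"[0-9]* ")
    # The second whitespace-separated field of a matching line is its mount point.
    return [line.split()[1] for line in mounts_text.splitlines() if pattern.match(line)]


print(mount_points_on(MOUNTS, "vdb"))  # ['/']
```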

# diff -u ./scripts/scripts-library.sh.orig ./scripts/scripts-library.sh

--- ./scripts/scripts-library.sh.orig 2015-11-21 00:29:41.706223880 +0900

+++ ./scripts/scripts-library.sh 2015-11-21 00:29:57.538223880 +0900

@@ -81,7 +81,7 @@

# only do this if the lxc vg doesn't already exist

if ! vgs lxc > /dev/null 2>&1; then

- blk_devices=$(lsblk -nrdo NAME,TYPE,RO | awk '/d[b-z]+ disk [^1]/ {print $1}')

+ blk_devices=$(lsblk -nrdo NAME,TYPE,RO | awk '/d[c-z]+ disk [^1]/ {print $1}')

for blk_dev in ${blk_devices}; do

# dismount any mount points on the device

mount_points=$(awk "/^\/dev\/${blk_dev}[0-9]* / {print \$2}" /proc/mounts)
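The patch narrows the character class, so vdb (which now holds the root filesystem) is no longer picked up when the lxc volume group is prepared. Running both patterns over sample `lsblk -nrdo NAME,TYPE,RO` output shows the difference. In the sample, vdc is a hypothetical extra disk added only to show what the patched pattern still accepts, and the trailing `[^1]` skips read-only devices.

```python
import re

# Sample "NAME TYPE RO" lines; vdc is a hypothetical extra disk.
LSBLK = """\
vda disk 0
vdb disk 0
vdc disk 0
sr0 rom 0
"""

ORIGINAL = re.compile(r"d[b-z]+ disk [^1]")  # pattern before the patch
PATCHED = re.compile(r"d[c-z]+ disk [^1]")   # pattern after the patch


def selected(pattern, text):
    """Names awk would print: awk /re/ matches anywhere in a line, hence search()."""
    return [line.split()[0] for line in text.splitlines() if pattern.search(line)]


print(selected(ORIGINAL, LSBLK))  # ['vdb', 'vdc']
print(selected(PATCHED, LSBLK))   # ['vdc']
```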

~ 30 minutes later ~

~ 30 minutes later ~

※4: http://wakame-vdc.org/

~ 30 minutes later ~

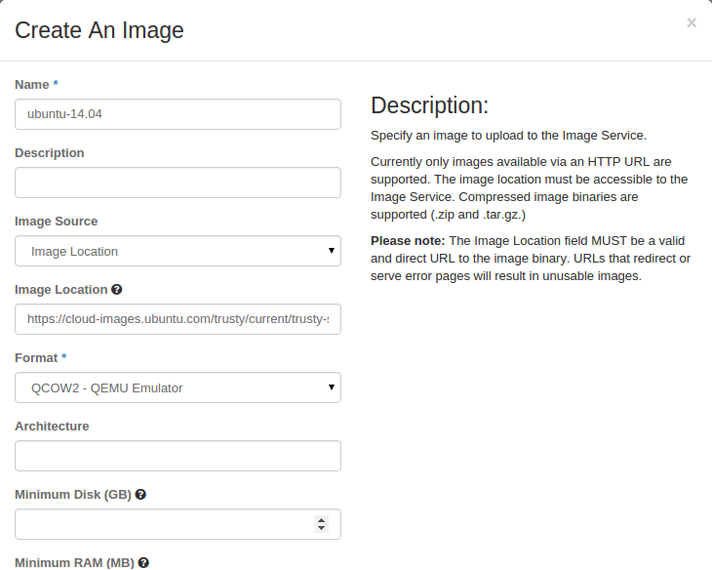

Name: ubuntu-14.04

Image Source: Image Location

Image Location: https://cloud-images.ubuntu.com/trusty/current/trusty-server-cloudimg-amd64-disk1.img

Format: QCOW2

Public: checked

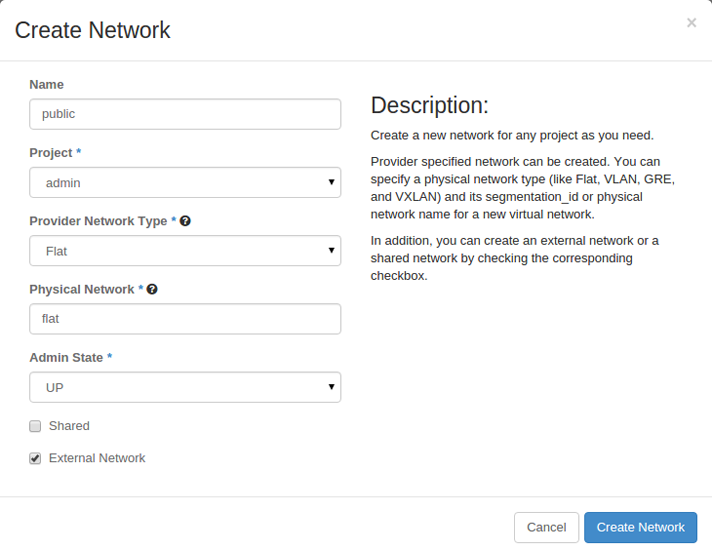

Name: public

Project: admin

Provider Network Type: Flat

Physical Network: flat

External Network: checked

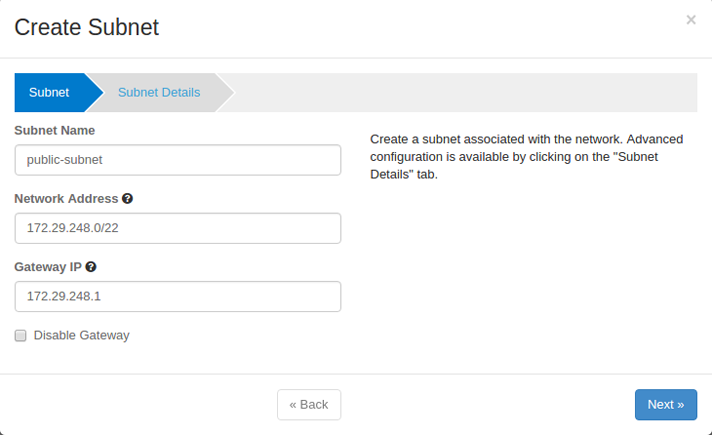

Subnet Name: public-subnet

Network Address: 172.29.248.0/22

Gateway IP: 172.29.248.1

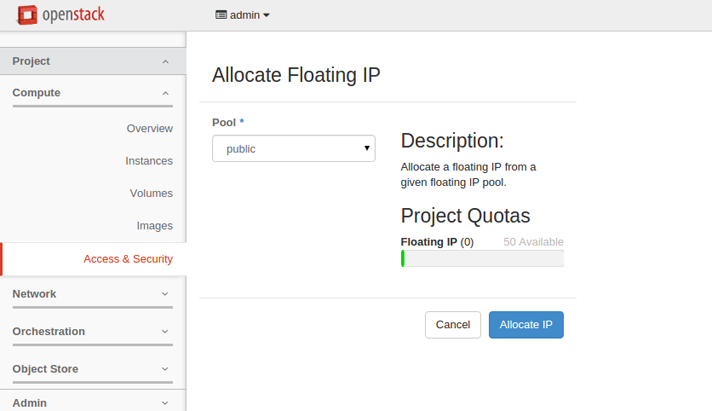

Enable DHCP: unchecked

Allocation Pools: 172.29.248.200,172.29.248.240
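Before entering these values, it can help to check that they are consistent. The gateway and the allocation pool must both lie inside 172.29.248.0/22, and the pool should not contain the gateway. Neutron hands out addresses on this external network, such as floating IPs, from that pool; the address used for SSH at the end, 172.29.248.201, falls inside it. The following is a minimal sketch using Python's standard `ipaddress` module. `pool_size` is a hypothetical helper.

```python
import ipaddress

subnet = ipaddress.ip_network("172.29.248.0/22")
gateway = ipaddress.ip_address("172.29.248.1")
pool_start = ipaddress.ip_address("172.29.248.200")
pool_end = ipaddress.ip_address("172.29.248.240")


def pool_size(subnet, gateway, start, end):
    """Validate an allocation pool and return how many addresses it holds."""
    if gateway not in subnet:
        raise ValueError("gateway is outside the subnet")
    if start not in subnet or end not in subnet:
        raise ValueError("allocation pool is outside the subnet")
    if start > end:
        raise ValueError("pool start is after pool end")
    if start <= gateway <= end:
        raise ValueError("gateway overlaps the allocation pool")
    return int(end) - int(start) + 1  # both ends are inclusive


print(subnet.num_addresses)                                # 1024
print(subnet.broadcast_address)                            # 172.29.251.255
print(pool_size(subnet, gateway, pool_start, pool_end))    # 41
```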

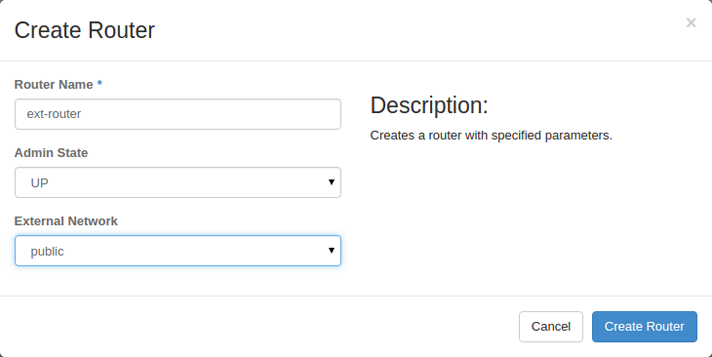

Router Name: ext-router

External Network: public

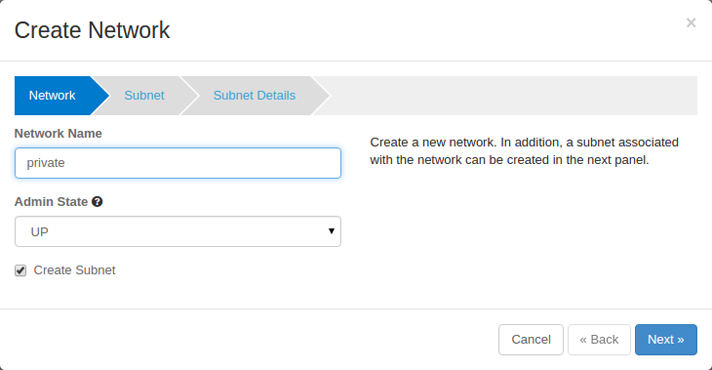

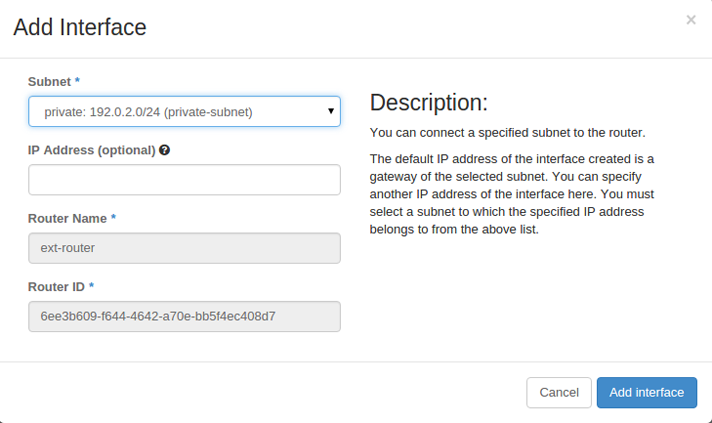

Network Name: private

Subnet Name: private-subnet

Network Address: 192.0.2.0/24

Gateway IP: 192.0.2.1

# lxc-attach --name `lxc-ls | grep utility_container`

# source /root/openrc

# nova keypair-add key1 > ~/key1.pem

# chmod 400 ~/key1.pem

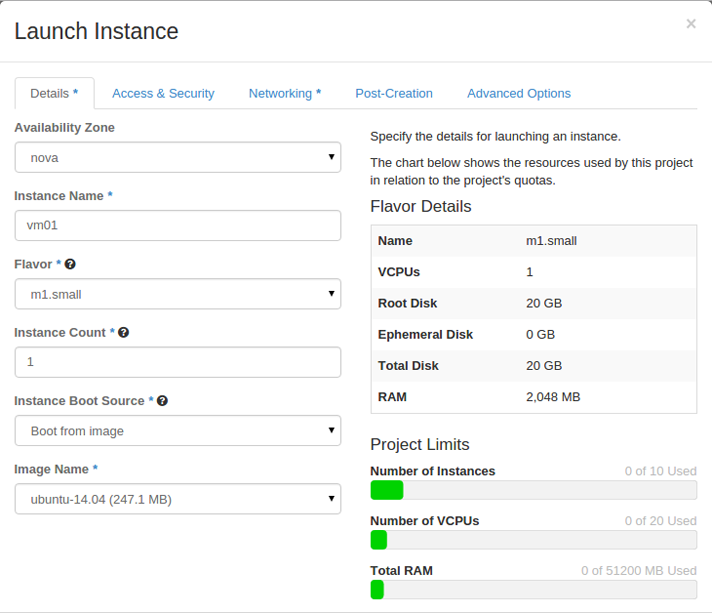

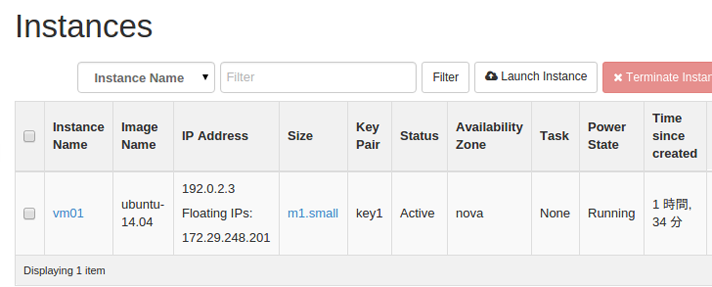

Instance Name: vm01

Flavor: m1.small

Instance Boot Source: Boot from image

Image Name: ubuntu-14.04

Security Groups: default

Selected networks: private

Traceback (most recent call last):

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/compute/manager.py", line 2155, in _build_resources

yield resources

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/compute/manager.py", line 2009, in _build_and_run_instance

block_device_info=block_device_info)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/virt/libvirt/driver.py", line 2441, in spawn

write_to_disk=True)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/virt/libvirt/driver.py", line 4326, in _get_guest_xml

context)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/virt/libvirt/driver.py", line 4175, in _get_guest_config

root_device_name)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/virt/libvirt/driver.py", line 3998, in _configure_guest_by_virt_type

guest.sysinfo = self._get_guest_config_sysinfo(instance)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/virt/libvirt/driver.py", line 3391, in _get_guest_config_sysinfo

sysinfo.system_serial = self._sysinfo_serial_func()

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/virt/libvirt/driver.py", line 3380, in _get_host_sysinfo_serial_auto

return self._get_host_sysinfo_serial_os()

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/virt/libvirt/driver.py", line 3374, in _get_host_sysinfo_serial_os

raise exception.NovaException(msg)

NovaException: Unable to get host UUID: /etc/machine-id is empty

※7: /openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/virt/libvirt/driver.py

def _get_host_sysinfo_serial_os(self):

"""Get a UUID from the host operating system

Get a UUID for the host operating system. Modern Linux

distros based on systemd provide a /etc/machine-id

file containing a UUID. This is also provided inside

systemd based containers and can be provided by other

init systems too, since it is just a plain text file.

"""

if not os.path.exists("/etc/machine-id"):

msg = _("Unable to get host UUID: /etc/machine-id does not exist")

raise exception.NovaException(msg)

with open("/etc/machine-id") as f:

# We want to have '-' in the right place

# so we parse & reformat the value

lines = f.read().split()

if not lines:

msg = _("Unable to get host UUID: /etc/machine-id is empty")

raise exception.NovaException(msg)

def _get_host_sysinfo_serial_auto(self):

if os.path.exists("/etc/machine-id"):

return self._get_host_sysinfo_serial_os()

else:

return self._get_host_sysinfo_serial_hardware()
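The quoted code explains the error. `_get_host_sysinfo_serial_auto` falls back to the hardware serial only when /etc/machine-id does not exist. An existing but empty file, as on this host, goes down the machine-id path and raises "is empty". The following simplified Python sketch reproduces that decision logic outside nova. It is not nova's code: the function names are hypothetical, and "hardware" is only a placeholder for the other branch.

```python
import os
import tempfile
import uuid


def serial_from_machine_id(path):
    """Simplified stand-in for the machine-id branch quoted above."""
    if not os.path.exists(path):
        raise RuntimeError("Unable to get host UUID: machine-id does not exist")
    with open(path) as f:
        # split() also drops the trailing newline; an empty file gives [].
        lines = f.read().split()
    if not lines:
        raise RuntimeError("Unable to get host UUID: machine-id is empty")
    # uuid.UUID() accepts 32 undashed hex digits; str() inserts the dashes.
    return str(uuid.UUID(lines[0]))


def serial_auto(path):
    """Mirror of the quoted "auto" mode: only existence is checked."""
    if os.path.exists(path):
        return serial_from_machine_id(path)  # an empty file still lands here
    return "hardware"                        # placeholder for the hardware branch


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "machine-id")
    open(path, "w").close()                  # empty file, as on the host
    try:
        serial_auto(path)
    except RuntimeError as exc:
        print(exc)                           # Unable to get host UUID: machine-id is empty
    with open(path, "w") as f:
        f.write("0123456789abcdef0123456789abcdef\n")  # made-up example ID
    print(serial_auto(path))                 # 01234567-89ab-cdef-0123-456789abcdef
    print(serial_auto(os.path.join(tmp, "missing")))  # hardware
```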

Traceback (most recent call last):

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/compute/manager.py", line 2155, in _build_resources

yield resources

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/compute/manager.py", line 2009, in _build_and_run_instance

block_device_info=block_device_info)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/virt/libvirt/driver.py", line 2444, in spawn

block_device_info=block_device_info)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/virt/libvirt/driver.py", line 4511, in _create_domain_and_network

self.plug_vifs(instance, network_info)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/virt/libvirt/driver.py", line 666, in plug_vifs

self.vif_driver.plug(instance, vif)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/virt/libvirt/vif.py", line 729, in plug

func(instance, vif)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/virt/libvirt/vif.py", line 485, in plug_bridge

iface)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/oslo_concurrency/lockutils.py", line 254, in inner

return f(*args, **kwargs)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/network/linux_net.py", line 1612, in ensure_bridge

raise exception.NovaException(msg)

NovaException: Failed to add bridge: sudo: unable to resolve host 133-130-116-204
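sudo prints "unable to resolve host 133-130-116-204" when it cannot resolve the machine's own hostname, typically because that name is missing from /etc/hosts. The following is a minimal sketch that checks /etc/hosts-style text for a hostname. The sample file contents are hypothetical, and `hosts_table` is an illustrative helper, not part of any tool used in this article.

```python
def hosts_table(hosts_text):
    """Map each name in /etc/hosts-style text to the first address listing it."""
    table = {}
    for raw in hosts_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding space
        if not line:
            continue
        addr, *names = line.split()
        for name in names:
            table.setdefault(name, addr)     # keep the first entry for a name
    return table


# Hypothetical /etc/hosts contents that lack the machine's own hostname.
HOSTS = """\
127.0.0.1 localhost
# The following lines are desirable for IPv6 capable hosts
::1 localhost ip6-localhost ip6-loopback
"""

print("133-130-116-204" in hosts_table(HOSTS))  # False
print(hosts_table(HOSTS + "127.0.1.1 133-130-116-204\n")["133-130-116-204"])  # 127.0.1.1
```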

# nova service-list

+----+------------------+----------------------------------------+----------+---------+-------+----------------------------+-----------------+

| Id | Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |

+----+------------------+----------------------------------------+----------+---------+-------+----------------------------+-----------------+

| 3 | nova-cert | aio1_nova_cert_container-9f91ec5b | internal | enabled | up | 2015-11-25T18:44:07.000000 | - |

| 6 | nova-conductor | aio1_nova_conductor_container-2a14684e | internal | enabled | up | 2015-11-25T18:44:08.000000 | - |

| 9 | nova-scheduler | aio1_nova_scheduler_container-faa6144f | internal | enabled | up | 2015-11-25T18:44:11.000000 | - |

| 12 | nova-consoleauth | aio1_nova_console_container-3a6309f1 | internal | enabled | up | 2015-11-25T18:44:06.000000 | - |

| 15 | nova-compute | 133-130-116-204 | nova | enabled | down | 2015-11-25T18:41:38.000000 | - |

| 18 | nova-compute | aio1 | nova | enabled | up | 2015-11-25T18:44:07.000000 | - |

+----+------------------+----------------------------------------+----------+---------+-------+----------------------------+-----------------+
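The table lists two nova-compute records: one under the host name 133-130-116-204, whose State is down, and one under aio1, which is up. The following is a small sketch that extracts the down services from this kind of ASCII table. The table is trimmed to a few rows and columns of the output above, and `parse_table` / `split_row` are hypothetical helpers.

```python
# Rows trimmed from the nova service-list output above (some columns omitted).
TABLE = """\
+----+----------------+-----------------------------------+----------+---------+-------+
| Id | Binary         | Host                              | Zone     | Status  | State |
+----+----------------+-----------------------------------+----------+---------+-------+
| 3  | nova-cert      | aio1_nova_cert_container-9f91ec5b | internal | enabled | up    |
| 15 | nova-compute   | 133-130-116-204                   | nova     | enabled | down  |
| 18 | nova-compute   | aio1                              | nova     | enabled | up    |
+----+----------------+-----------------------------------+----------+---------+-------+
"""


def split_row(line):
    """Split one '| a | b |' row into stripped cell values."""
    return [cell.strip() for cell in line.strip().strip("|").split("|")]


def parse_table(text):
    """Turn an ASCII table like the one above into a list of dicts."""
    rows = [line for line in text.splitlines() if line.startswith("|")]
    header = split_row(rows[0])  # the first "|" row holds the column names
    return [dict(zip(header, split_row(row))) for row in rows[1:]]


down = [(r["Binary"], r["Host"]) for r in parse_table(TABLE) if r["State"] == "down"]
print(down)  # [('nova-compute', '133-130-116-204')]
```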

Instance failed network setup after 1 attempt(s)

Traceback (most recent call last):

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/compute/manager.py", line 1564, in _allocate_network_async

dhcp_options=dhcp_options)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/network/neutronv2/api.py", line 727, in allocate_for_instance

self._delete_ports(neutron, instance, created_port_ids)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/oslo_utils/excutils.py", line 195, in __exit__

six.reraise(self.type_, self.value, self.tb)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/network/neutronv2/api.py", line 719, in allocate_for_instance

security_group_ids, available_macs, dhcp_opts)

File "/openstack/venvs/nova-12.0.1/lib/python2.7/site-packages/nova/network/neutronv2/api.py", line 342, in _create_port

raise exception.PortBindingFailed(port_id=port_id)

PortBindingFailed: Binding failed for port e0baf507-5aac-489c-bfa4-f2f631b6cab5, please check neutron logs for more information.

~ 2 hours later ~

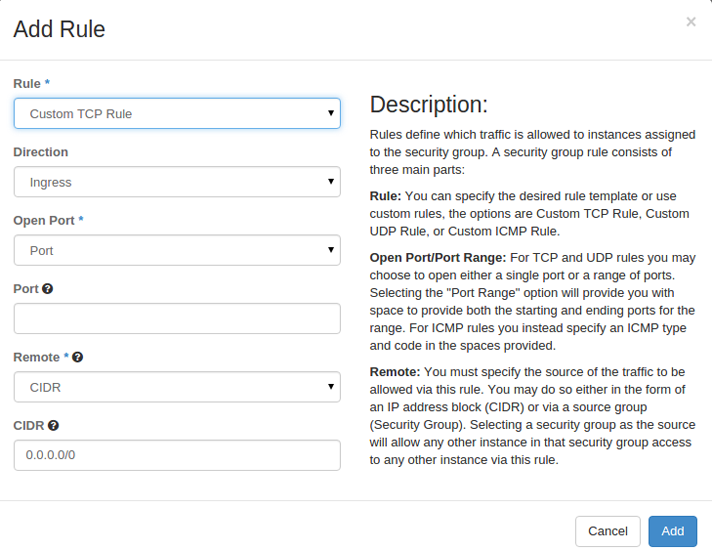

Rule: SSH

Remote: CIDR

CIDR: 0.0.0.0/0

# ssh -i ~/key1.pem ubuntu@172.29.248.201
